| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Kola Adegoke | -- | 8190 | 2025-07-15 01:13:17 | |
| 2 | | Catherine Yang | -3032 word(s) | 5158 | 2025-07-15 03:09:20 | |
| 3 | | Jason Zhu | -3 word(s) | 5155 | 2025-07-16 04:02:43 | |


Interoperability in digital health refers to the seamless, secure, and scalable exchange of health information between care environments and digital platforms. It is a central pillar for integrating wearable sensors, AI-based diagnostics, mobile health applications, virtual coaching applications, and clinical and non-clinical workflows for both digital therapeutics and ubiquitous health (uHealth). Interoperability is achieved through standards and frameworks such as HL7 FHIR, TEFCA, and SMART on FHIR, which provide shared protocols for information exchange, protection, and semantic harmonization. This entry describes the fundamental ideas, new governance paradigms, technical architectures, and regulatory issues involved in realizing interoperable health ecosystems for personalized, preventive, and participatory care. It also describes barriers such as fragmentation, data silos, and ethical issues, and identifies enablers such as federated learning, blockchain, and FAIR data principles. The content is based on a structured narrative review registered with OSF (DOI: 10.17605/OSF.IO/PNKG4) and a preprint version publicly available via Preprints.org.

1. Introduction

The integration of digital technologies into healthcare, encompassing digital therapeutics and ubiquitous health (uHealth) systems, holds substantial promise for transforming care delivery, improving outcomes, and enabling proactive disease management [1][2]. These innovations support scalable and personalized interventions, particularly for chronic conditions and underserved populations. Digital therapeutics have also emerged as tools for real-time evidence generation and precision medicine [3][4].

However, realizing their full potential hinges on overcoming core challenges related to interoperability, data privacy, ethical compliance, and equitable access [5][6][7]. Among these, interoperability is foundational. Despite progress, health systems continue to struggle with fragmented data architectures that hinder seamless health information exchange (HIE) across platforms and providers.

To address these barriers, standards such as HL7 Fast Healthcare Interoperability Resources (FHIR) and Trusted Exchange Framework and Common Agreement (TEFCA) have been developed. HL7 FHIR offers syntactic and semantic interoperability through modular data structures and standardized APIs, whereas TEFCA establishes policy and trust frameworks to facilitate cross-organizational data exchange in the United States [8][9][10].

Although FHIR adoption is expanding globally, particularly in mobile applications and electronic health records, its implementation remains inconsistent, often due to local integration challenges, a lack of data normalization, and semantic discrepancies [5][8]. Similarly, TEFCA underscores the importance of centralized governance but faces limitations stemming from its voluntary participation model and regulatory fragmentation [9].

This paper presents a structured narrative review and policy analysis of the interoperability frameworks underpinning the deployment of digital therapeutics and uHealth systems. Focusing on HL7 FHIR and TEFCA, this examination of technical standards, governance models, and implementation case studies highlights enablers and persistent gaps in achieving scalable, ethical, and equitable digital health infrastructures.

2. Materials and Methods

2.1. Search Strategy and Scope

This structured narrative review synthesizes interdisciplinary research on the interoperability frameworks that underpin the deployment of uHealth and digital therapeutics. Although this structured narrative review was not conducted in full accordance with PRISMA guidelines, a modified PRISMA flow diagram is included to transparently illustrate the article identification, screening, and selection process. The review adhered to systematic principles while maintaining methodological flexibility to capture emerging technologies, evolving standards, and regulatory frameworks more effectively.

Electronic searches were conducted across PubMed, Scopus, IEEE Xplore, and ACM Digital Library using combinations of keywords, including:

- HL7 FHIR, TEFCA, digital therapeutics, uHealth, artificial intelligence in healthcare, health data privacy, GDPR, blockchain, federated learning, interoperability.

Additionally, manual searches of the reference lists of the selected literature were conducted to identify supplemental studies. Discussions with professionals and domain experts, particularly in digital health policy, health IT, and interoperability architecture, further guided the identification of relevant sources. The authors also drew upon their interdisciplinary expertise and prior experience in health informatics, mHealth governance, and the development of digital therapeutics.

A summary of the search scope and sources is provided in Table 1.

2.2. Eligibility Criteria

Studies and documents were included if they met the following criteria:

- Peer-reviewed publications, policy documents, or implementation frameworks,

- Published between January 2000 and July 2025,

- Written in English,

- Addressed at least one of the following themes:

  - Interoperability standards (e.g., HL7 FHIR, SMART on FHIR, and SNOMED CT),

  - Evaluation of digital therapeutics, AI-powered systems, or mHealth platforms,

  - Governance and privacy regulations (e.g., HIPAA, GDPR, TEFCA),

  - Ethical, legal, or infrastructural dimensions of scalable digital health.

Exclusion criteria included:

- Non-healthcare or commercial technology studies,

- Publications not in English,

- Opinion pieces or commentaries lacking technical or policy analysis.

2.3. Thematic Organization and Analysis

The selected materials were organized thematically to support a comparative analysis of interoperability in practice, focusing on the following:

- Technical Standards and APIs,

- Data Governance and Legal Frameworks,

- Real-World Implementation and Use Cases.

This organization facilitated the review, enabling the identification of gaps, enablers, and convergence opportunities at the intersection of the technical, regulatory, and ethical domains in digital health ecosystems.

Table 1. Search Strategy and Article Selection Summary.

| Parameter | Details |
|---|---|
| Type of Review | Structured Narrative Review |
| Timeframe Covered | January 2000 – July 2025 |
| Keywords Used | HL7 FHIR, TEFCA, digital therapeutics, uHealth, AI in healthcare, health data privacy, GDPR, blockchain, federated learning, interoperability |
| Databases Searched | PubMed, Scopus, IEEE Xplore, ACM Digital Library |
| Supplementary Sources | Manual reference screening, expert consultations, and authors' domain expertise |
| Language Restrictions | English only |
| Types of Articles Included | Peer-reviewed original research, regulatory frameworks, policy reports, and implementation case studies |
| Inclusion Criteria | Articles on digital health standards, interoperability architecture, ethical governance, or implementation |
| Exclusion Criteria | Non-healthcare applications, non-English, opinion pieces, or lacking empirical, policy, or technical analysis |
| Search Methodology Notes | Boolean logic (AND, OR), MeSH terms, filters by publication date, peer-review status, relevance |

PRISMA-style Article Selection Flow

| Stage | Count (n) |
|---|---|
| Records identified via databases | 457 |
| Additional sources identified | 38 |
| Total identified | 495 |
| Duplicates removed | 25 |
| Records after duplicates removed | 470 |
| Title and abstract screened | 470 |
| Records excluded | 376 |
| Full-text articles assessed | 94 |
| Full-text articles excluded | 34 (Non-technical, unrelated, insufficient data) |
| Included in the final synthesis | 60 (Technical research, frameworks, policy reports) |

Note: Although this structured narrative review did not strictly follow PRISMA guidelines, a modified PRISMA-style flow chart is provided to document the identification, screening, and selection process transparently. While a total of 80 references are cited, only 60 met the criteria for inclusion in the synthesis. The rest were used to support background, theoretical framing, and context.
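The selection counts reported above can be cross-checked with simple arithmetic. The following is a minimal sketch in Python; the dictionary keys and the function name `prisma_counts` are illustrative labels introduced here, not part of the review protocol.

```python
# Upstream counts copied from the PRISMA-style selection flow reported above.
flow = {
    "database_records": 457,
    "additional_sources": 38,
    "duplicates_removed": 25,
    "records_excluded": 376,
    "full_text_excluded": 34,
}


def prisma_counts(flow):
    """Derive each downstream stage of the flow from the upstream counts."""
    total = flow["database_records"] + flow["additional_sources"]
    screened = total - flow["duplicates_removed"]
    full_text = screened - flow["records_excluded"]
    included = full_text - flow["full_text_excluded"]
    return {
        "total_identified": total,
        "screened": screened,
        "full_text_assessed": full_text,
        "included": included,
    }


counts = prisma_counts(flow)
print(counts)
```

The derived values (495 identified, 470 screened, 94 assessed in full text, 60 included) agree with the stages reported in the flow.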

3. Technical Interoperability Frameworks

3.1. Interoperability as a Foundational Pillar

Interoperability refers to the ability of different health information systems to exchange, interpret, and utilize data, which is crucial for realizing the full potential of digital therapeutics and uHealth systems [11][12]. Without seamless data exchange, the vision of integrated patient-centric care remains elusive. Technologies such as telehealth, remote monitoring, and wearable devices generate vast volumes of real-time health data. However, without effective integration into existing health information infrastructure, these data remain underutilized [13][14].

Interoperability not only supports coordinated care but also drives innovation, enabling cross-platform applications that leverage diverse datasets [15]. However, widespread adoption has been impeded by the following:

- Technical barriers, such as incompatible data formats and communication protocols [14];

- Organizational challenges, including stakeholder resistance and misaligned incentives [16];

- Semantic misalignment, stemming from the varied use of medical terminologies and coding standards.

These challenges necessitate a multifaceted response that involves open standards, robust governance mechanisms, and global collaboration. In the U.S. and elsewhere, policy reforms remain crucial for strengthening interoperability infrastructure [17].

3.2. HL7 FHIR and SMART on FHIR

The HL7 Fast Healthcare Interoperability Resources (FHIR) standard has emerged as a cornerstone for scalable API-driven health data exchange. FHIR's modular resource structure and RESTful architecture support integration across EHRs, mobile apps, and cloud-based services [18]. One widely adopted use case is Apple Health's integration with FHIR and SMART on FHIR apps, enabling patient-mediated data sharing and health monitoring [19].

Although FHIR is technically robust, challenges remain in achieving semantic interoperability, as inconsistent data encoding across systems hinders machine-readable interpretation [20][21]. SMART on FHIR builds on FHIR by adding secure, app-level access based on standardized OAuth 2.0 protocols, making it easier for third-party apps to connect to diverse EHR platforms [22].
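To make the RESTful and OAuth 2.0 aspects concrete, the following minimal sketch builds a FHIR search URL and a SMART on FHIR authorization request using only the Python standard library. The server addresses, client identifier, and redirect URI are hypothetical placeholders, and the helper names (`fhir_search_url`, `pkce_pair`, `smart_authorize_url`) are introduced here for illustration. The query parameters follow the FHIR search pattern `[base]/[type]?[parameters]` and the SMART App Launch authorization request; no network call is made.

```python
import base64
import hashlib
import secrets
from urllib.parse import parse_qs, urlencode, urlparse


def fhir_search_url(base_url, resource_type, **params):
    """Build a FHIR RESTful search URL of the form [base]/[type]?[parameters]."""
    return f"{base_url.rstrip('/')}/{resource_type}?{urlencode(params)}"


def pkce_pair():
    """Return a (verifier, challenge) pair using the S256 method of RFC 7636."""
    verifier = secrets.token_urlsafe(48)
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    challenge = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return verifier, challenge


def smart_authorize_url(authorize_endpoint, client_id, redirect_uri, fhir_base, scope):
    """Build the browser redirect that starts a SMART on FHIR authorization flow."""
    verifier, challenge = pkce_pair()
    state = secrets.token_urlsafe(16)  # opaque value echoed back to detect CSRF
    query = urlencode({
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": scope,
        "state": state,
        "aud": fhir_base,  # the FHIR server the requested token is intended for
        "code_challenge": challenge,
        "code_challenge_method": "S256",
    })
    return f"{authorize_endpoint}?{query}", state, verifier


# Hypothetical endpoints and identifiers, for illustration only.
FHIR_BASE = "https://fhir.example.org/r4"

search_url = fhir_search_url(
    FHIR_BASE, "Observation", patient="123", code="http://loinc.org|8867-4"
)

auth_url, state, verifier = smart_authorize_url(
    "https://auth.example.org/authorize",
    client_id="demo-app",
    redirect_uri="https://app.example.org/callback",
    fhir_base=FHIR_BASE,
    scope="launch/patient patient/Observation.rs openid fhirUser",
)
query = parse_qs(urlparse(auth_url).query)
print(search_url)
print(sorted(query))
```

In a real deployment the authorization and token endpoints would be discovered from the server's published SMART configuration rather than hard-coded, and the app would later exchange the returned code, together with the stored verifier, for an access token.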

3.3. Syntactic vs. Semantic Interoperability

Syntactic interoperability concerns the structure and format of the information exchanged, whereas semantic interoperability ensures a shared understanding of the meaning of that information. Standards such as SNOMED CT, LOINC, and the ICD classification family form the basis for semantic alignment between distinct health systems [22][23]. However, aligning such terminologies within local implementations remains a complex task. LOINC, for example, is optimized for laboratory information, whereas SNOMED CT spans a broader range of clinical concepts, and mappings between the two often lack accuracy and consistency [24].

The transition from ICD-10 to ICD-11 provides enhanced semantic granularity and digital readiness; however, it raises interoperability concerns owing to differences in structure, post-coordination logic, and version mapping fidelity [25]. Applying complex medical vocabularies within interoperability standards such as HL7 FHIR requires precise ontological modeling. For example, Martínez-Costa et al. proposed an ontology-based approach to align clinical information between SNOMED CT and FHIR, resulting in improved semantic accuracy and computability within health data exchanges [26].

These examples illustrate the need for ongoing efforts to align widely accepted terminologies, particularly within the modular architectures of new interoperability standards. True semantic interoperability demands both technical mapping and coordinated action by institutions, vendors, and regulators.
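The distinction between syntactic and semantic interoperability can be illustrated with a small sketch. Both records below are well-formed FHIR-style `Condition` resources (syntactic agreement), but only one carries codes from shared terminologies, so only that one can be compared by meaning. The records and helper functions are hypothetical; the pairing of SNOMED CT 38341003 with ICD-10 I10 is shown for illustration only, since the SNOMED CT concept is broader than the ICD-10 category and the two are not exactly equivalent.

```python
SNOMED = "http://snomed.info/sct"
ICD10 = "http://hl7.org/fhir/sid/icd-10"

# A Condition whose CodeableConcept carries codings from two terminologies.
coded_condition = {
    "resourceType": "Condition",
    "subject": {"reference": "Patient/example"},
    "code": {
        "coding": [
            {"system": SNOMED, "code": "38341003", "display": "Hypertensive disorder"},
            {"system": ICD10, "code": "I10", "display": "Essential (primary) hypertension"},
        ],
        "text": "Hypertension",
    },
}

# Same structure, but only free text: parseable, yet not machine-comparable.
text_only_condition = {
    "resourceType": "Condition",
    "subject": {"reference": "Patient/example"},
    "code": {"text": "high blood pressure"},
}


def code_for_system(resource, system):
    """Return the first code the resource carries for a given terminology, if any."""
    for coding in resource.get("code", {}).get("coding", []):
        if coding.get("system") == system:
            return coding.get("code")
    return None


def same_concept(a, b, system):
    """True only when both resources carry the same code in the same terminology."""
    code_a = code_for_system(a, system)
    code_b = code_for_system(b, system)
    return code_a is not None and code_a == code_b


print(code_for_system(coded_condition, ICD10))
print(same_concept(coded_condition, coded_condition, SNOMED))
print(same_concept(coded_condition, text_only_condition, SNOMED))
```

The last comparison fails even though a clinician would read both records as describing the same problem, which is the gap that terminology mapping and ontological modeling aim to close.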

Table 2. Comparison of Key Interoperability Frameworks.

| Framework | Adoption Scope | Primary Purpose | Challenges |
|---|---|---|---|
| FHIR | Widely adopted globally in apps, EHRs | Standardized API-based data exchange | Semantic consistency, implementation variability |
| TEFCA | US-centric; early-stage voluntary implementation | Trust framework for nationwide HIE | Limited enforcement, optional participation |
| SNOMED CT | Global clinical coding standard, widely adopted | Terminology standard for semantic interoperability | Mapping to local vocabularies, licensing |

This table compares the Standards for uHealth Interoperability.

Table 3. Interoperability Frameworks Comparison.

| Framework | Standardization | Scope | Adoption Rate | Key Barriers | Region |
|---|---|---|---|---|---|
| HL7 FHIR | Yes | Clinical | High | Semantic harmonization | Global |
| TEFCA | Partial | Clinical/Admin | Low–moderate | Voluntary adoption | USA |
| SNOMED CT | Yes | Clinical vocabulary | Widespread | Licensing/cost | Global |
| IHE | Yes | Data exchange protocols | Moderate | Complexity | EU |

This table compares the core frameworks, including HL7 FHIR, TEFCA, SNOMED CT, and IHE, in terms of scope, adoption, barriers, and regions of influence.

4. Governance and Policy Models

4.1. TEFCA (U.S. Trust Framework)

The United States created the Trusted Exchange Framework and Common Agreement (TEFCA) to enable nationwide secure exchange of health information through Qualified Health Information Networks (QHINs). It offers an underlying technical and governance infrastructure to achieve interoperability between fragmented systems [27]. As implementation proceeds, TEFCA is expected to work alongside standards such as FHIR and SMART on FHIR to standardize terminologies and API use across the national health data infrastructure [21][28].

Although TEFCA holds promise, its implementation has been gradual, in part because participation remains optional, timelines have been misaligned, and integrating pre-existing Health Information Networks into the QHIN framework has proven challenging [29]. This is particularly true for resource-poor settings and rural health organizations that cannot meet technical thresholds [30]. Moreover, as Szarfman et al. note, uniform policies are not sufficient by themselves unless stakeholders agree on shareable medical data architectures that support day-to-day operations [31].

Standardization approaches using FHIR pipelines (as in Rigas et al. [32] and Marfoglia et al. [33]) offer solutions for bridging the heterogeneity in system architectures, particularly when semantic alignment is required for AI applications. However, these advances in structured data modeling must be implemented within enforceable national frameworks, something TEFCA has yet to deliver.

4.2. HIPAA, GDPR, and International Contrasts

Data governance frameworks, such as HIPAA (U.S.) and GDPR (EU), represent two distinct paradigms: entity-based and rights-based regulation. HIPAA protects health data within the scope of covered entities, whereas GDPR enforces data minimization, consent rights, and individual control over personally identifiable information [34][35]. This divergence has profound implications for AI-enabled digital therapeutics. GDPR's stance on secondary use and anonymization can conflict with AI's dependence on large and diverse datasets [36].

Furthermore, Amar et al. highlighted semantic inconsistencies within electronic health records (EHRs) that are amplified when policies restrict data transformation workflows [37].

Meanwhile, HIPAA has gaps, particularly around telehealth platforms, mobile apps, and non-covered digital health actors that fall outside its jurisdiction [30]. As Szarfman et al. argue, U.S. data-sharing capabilities often stall not due to technical incapacity, but due to a lack of regulatory alignment between clinical and administrative systems [31].

These tensions create trade-offs between innovation, ethics, and interoperability, complicating the development of cross-jurisdictional health AI models. They also affect how developers construct secure workflows for identity matching, patient consent, and data traceability.

Table 4. Policy vs. AI Compatibility.

| Policy Framework | AI Compatibility | Secondary Use Allowed | Data Minimization Conflict | Interoperability Provisions |
|---|---|---|---|---|
| GDPR | Limited | No | Yes | Conditional |
| HIPAA | Moderate | Yes | No | Yes |
| TEFCA | High | Yes | No | High |

This table summarizes the conflicts and alignments between the major regulatory models (GDPR, HIPAA, and TEFCA) and the demands of AI-based digital health, including the use of secondary data and data minimization.

4.3. WHO Global Strategy on Digital Health

The WHO Global Strategy on Digital Health 2020–2025 emphasizes equitable access, open standards, and country-led digital transformation, particularly in low- and middle-income countries (LMICs) [38]. It promotes interoperable architectures based on global standards, such as HL7 FHIR, and advocates adaptive policies, ethical AI, and infrastructure development. This approach addresses the gaps that exist even in high-income countries.

Ricciardi, Celsi, and Zomaya emphasize the need for AI governance frameworks rooted in transparency and cross-sector cooperation [39]. In home-based care, ethical challenges associated with IoT-driven digital twins highlight the complexity of consent and system liability [40].

Frameworks such as those developed by Marfoglia et al. and Rigas et al. complement WHO's vision by building modular and scalable FHIR infrastructures to support global interoperability [32][33].

However, as Watson et al. highlighted through casework in the U.S. Department of Transportation, systemic change is slow unless digital pilots are integrated with national certification bodies [41]. Ultimately, meaningful global interoperability requires policy harmonization, robust governance, and commitment to equity—a message echoed across the regulatory and technical literature.

5. Case Study: Veterans Health Administration (VHA)

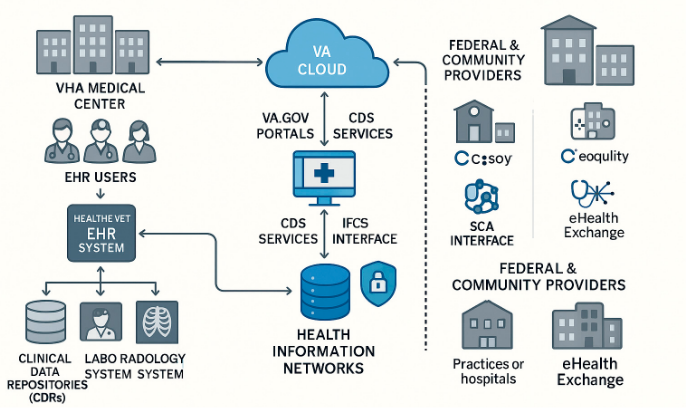

The Veterans Health Administration (VHA) is one of the largest integrated healthcare systems in the United States. Its ongoing digital upgrade, especially from the legacy VistA system to Cerner Millennium EHR, is a prime example of national-level efforts to achieve semantic interoperability, FHIR adoption, and the implementation of AI-enabled tools [42]. This example illustrates the intersection of large-scale governance, a regulation-compliant AI environment, and highly complex system integration.

The VHA's approach to policy-aligned innovation and legacy modernization reflects both the broader promise of digital health and the lessons of the previous decade [43]. The system's evolution mirrors agency-wide efforts to standardize clinical terminologies, centralize veteran records, and implement FHIR APIs [44]. In particular, VHA's modernization has adopted the principle of Living Labs: operational test sites where AI-powered, interoperable solutions are tested and refined iteratively to enhance readiness and governance [43].

5.1. Goals and Results of Interoperability

One of the fundamental pillars of VHA modernization is interoperability between the VHA and the Department of Defense (DoD), together with data harmonization across VHA facilities. HL7 FHIR has become the focal point for enabling veteran-controlled data, particularly in areas such as drug tracking, diagnostics, and patient identity confirmation [44]. Alongside data exchange, FHIR-based clinical decision support (CDS) tools are being established to deliver real-time, context-aware clinical information within the clinical workflow, an approach that Braunstein highlights as a means of building scalable, patient-level innovation [44].

Snyder et al. (2024) reported that Health Information Exchange (HIE) tools improved the precision of medication reconciliation and reduced duplicative data between VHA sites. However, they identified persistent issues, including inconsistent terminology, variable site readiness, and a user interface that lacks coherence and does not meet the needs of clinicians [45]. Denney (2015) previously demonstrated that structured fields and CDS tools reduce medication errors; however, users want simpler dashboards and alert handling [46].

Braunstein (2022) highlighted the transformative capacity of FHIR-based APIs to enable scalable cross-platform interoperability, particularly when paired with adaptive CDS tools and mobile health applications [44]. Sarkar (2022) similarly indicated that extracting actionable information from raw health data depends not only on technology but also on workflow alignment and data governance, both of which are current areas of focus for the VHA [47].

VHA testbeds also serve as models that other organizations can emulate. Gilbert et al. (2025) advocated regulation-compliant Living Labs to support the iterative refinement of AI tools within real-world hospital contexts, similar to the VHA's pilot phases of deployment [44].

5.2. Implementation Failures and Limitations

Despite its ambitions, the Cerner Millennium rollout has experienced significant implementation failures, including cost overruns, delays, and dissatisfaction among clinicians. Laster (2024) documented how inadequate training, recurring system failures, and poorly designed workflows eroded clinician trust, particularly at the earliest implementation sites [48].

As of January 2024, only select VA Medical Centers had completed the transition, resulting in a fragmented network and tangible interoperability silos. Differences in rollout readiness, along with differing behavioral health data policies at the VA and DoD, complicate longitudinal patient identity tracking [45]. However, initial technical accomplishments, such as eliminating drug redundancy and standardizing clinical note writing, suggest that the VHA can deliver on its FHIR-based vision once governance models and usability-centered design are solidified. As indicated by Holmgren et al. (2023), policy will need to remain closely attuned to clinical usability to achieve interoperability that yields meaningful improvements in relevant health outcomes [49].

Figure 1. Architecture of the Veterans Health Administration's EHR and External Integration Points for Interoperability.

This figure shows the VHA EHR system architecture, with integration between clinical systems, VA cloud services, and external health networks via standardized interfaces for secure data exchange.

6. Emerging Technologies for Interoperability

6.1. AI/ML and NLP for Data Harmonization

Artificial intelligence (AI) and natural language processing (NLP) are transforming health data interoperability by converting unstructured clinical narratives into structured forms that can be exchanged on FHIR-based platforms. Tools such as Amazon Comprehend Medical have achieved entity identification accuracies between 80% and 96%, paving the way for more meaningful integration of clinical notes into electronic platforms [50]. Similarly, large language models such as Google's Med-PaLM 2 have demonstrated 85.4% accuracy on clinical question-answering tasks, which holds promise for abstracting large datasets from electronic health records (EHRs) to support clinical decision-making [51].
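As a rough illustration of how extracted entities might be turned into exchangeable resources, the sketch below maps a list of entities to FHIR-style `Condition` and `MedicationStatement` dictionaries. The entity schema (`text`, `category`, `score`), the 0.8 confidence threshold, and the function name `entities_to_fhir` are assumptions made for this example; they do not reproduce the output format of any particular NLP service. Machine-extracted conditions are marked as unconfirmed, and only free text is recorded because no terminology lookup is performed here.

```python
CONDITION_VER_STATUS = "http://terminology.hl7.org/CodeSystem/condition-ver-status"


def entities_to_fhir(entities, patient_ref, min_score=0.8):
    """Convert sufficiently confident entities into FHIR-style resource dicts."""
    resources = []
    for ent in entities:
        if ent["score"] < min_score:
            continue  # low-confidence extractions are left for human review
        if ent["category"] == "MEDICAL_CONDITION":
            resources.append({
                "resourceType": "Condition",
                "subject": {"reference": patient_ref},
                "verificationStatus": {
                    "coding": [{"system": CONDITION_VER_STATUS, "code": "unconfirmed"}]
                },
                "code": {"text": ent["text"]},
            })
        elif ent["category"] == "MEDICATION":
            resources.append({
                "resourceType": "MedicationStatement",
                "status": "unknown",
                "subject": {"reference": patient_ref},
                "medicationCodeableConcept": {"text": ent["text"]},
            })
    return resources


# Hypothetical entities, as an NLP pipeline might return for one clinical note.
entities = [
    {"text": "type 2 diabetes", "category": "MEDICAL_CONDITION", "score": 0.95},
    {"text": "metformin", "category": "MEDICATION", "score": 0.91},
    {"text": "possible neuropathy", "category": "MEDICAL_CONDITION", "score": 0.42},
]

resources = entities_to_fhir(entities, "Patient/example")
print([r["resourceType"] for r in resources])
```

A production pipeline would add a terminology-binding step (for example, to SNOMED CT or RxNorm) before exchange, since text-only resources are syntactically valid but carry limited semantic value.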

However, AI programs are still imperfect. Typical pitfalls include hallucinations regarding medical truths, bias towards certain fields, and poor cross-institutional generalizability, most notably, on imaging-based models [52]. To compensate for this, developers insist on local fine-tuning of datasets and institution-level workflow integration.

In addition to NLP, AI has shown a remarkable impact on risk stratification, diagnosis, and customized therapy. In low- and middle-income countries (LMICs), AI has supported the scaling of oncology diagnosis and improved treatment planning [53]. In hospitals, AI is increasingly used to streamline workflows and automate diagnosis, with improvements observed in image interpretation and triage efficiency [54].

As AI is integrated with neural interface devices, there are intriguing possibilities for treating neurological diseases through real-time, patient-specific adjustment of therapy [55]. AI-powered virtual care and chatbot tools also continue to advance, enhancing physician-patient communication, empathy simulation, one-touch triage, and consultations [56].

Remote patient monitoring (RPM) systems go one step further by continuously tracking patients' vital signs, enabling earlier intervention and reducing both the clerical burden on clinicians and the time they spend on the care scenarios involved [57]. Globally, AI has scaled up telemedicine programs in LMICs, improving access, patient autonomy, and system productivity [58].

Table 5. Integration of AI, IoT, and Cloud Computing in Digital Health: Key Benefits and Use Cases.

| Technology Area | Application in Healthcare | Benefits |
|---|---|---|
| Artificial Intelligence (AI) | Pattern recognition, diagnostics, predictive analytics, clinical decision support, automation | Improves diagnostics, treatment planning, personalized care, and reduces workload |
| Remote Patient Monitoring (RPM) | Vital signs tracking, chronic disease management, and early detection | Enhances care at home, reduces hospitalizations, and increases convenience |
| Telemedicine & Virtual Assistants | Real-time consultations, symptom triage, AI-powered Q&A, medication adherence support | Expands access, improves patient satisfaction, saves clinician time |
| Big Data & Cloud Computing | Health data aggregation, analytics, storage, and sharing | Enables large-scale modeling, predictive insights, and personalized medicine |
| Internet of Things (IoT) | Wearables, biosensors, and continuous monitoring | Supports early detection, chronic care management, and real-time alerts |
| Interoperability Frameworks (FHIR, TEFCA) | Data sharing standards, modular transformation pipelines | Enables system integration, standardization, and scalable deployment of uHealth tools |
| Cancer Care & Precision Medicine | AI in radiology, oncology decision support, and genetic risk assessment | Early detection, targeted treatment, enhanced outcomes, improved equity |
| Administrative Automation | Scheduling, billing, claims processing, and workflow optimization | Reduces errors, enhances efficiency, and frees clinician time |
| Ethical & Governance Considerations | Algorithmic bias, privacy, transparency, and regulatory frameworks | Ensures safe, equitable, and trustworthy AI deployment |

Table 5 summarizes the convergence of the technologies discussed in this study, highlighting their applications, benefits, and system-level implications.

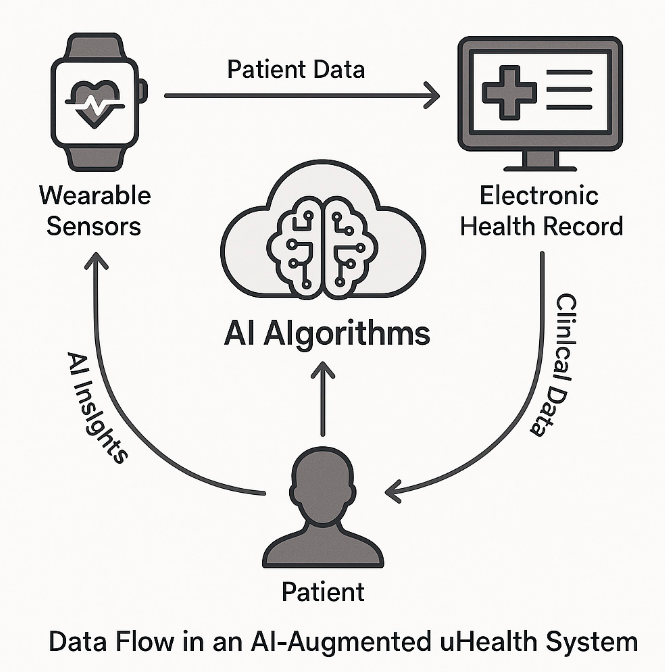

Figure 2. Data Flow in an AI-Augmented uHealth System.

This figure illustrates how AI algorithms process patient data from wearable sensors and electronic health records (EHRs) to generate personalized insights, which are then relayed back to both the patient and the monitoring devices in a continuous feedback loop.

6.2. Blockchain and Distributed Ledgers


Blockchain technologies offer a secure and transparent platform for managing health data through tamper-evident audit trails, decentralized data exchange, and patient-centered consent structures [59]. For national health infrastructure, blockchain has been proposed as a way to enhance data integrity and interoperability; however, practical implementations have yet to overcome challenges such as latency, energy costs, and compliance with jurisdiction-specific regulations.

Hybrid architectures, which integrate on-chain verification and off-chain storage of data, provide a practical solution that balances openness and performance demands [60].
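The hybrid pattern can be sketched in a few lines: the full record stays in an ordinary (off-chain) store, while only its hash is appended to a hash-linked ledger, so later tampering with the stored record becomes detectable. This is a single-process simulation under simplifying assumptions, with no network, consensus mechanism, or access control; the class and function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def canonical(record) -> bytes:
    """Serialize a record deterministically so equal content hashes equally."""
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


class Ledger:
    """Append-only list of blocks, each linked to the hash of its predecessor."""

    def __init__(self):
        self.blocks = []

    def append(self, record_hash):
        prev_hash = self.blocks[-1]["block_hash"] if self.blocks else GENESIS
        index = len(self.blocks)
        block_hash = sha256_hex(f"{index}|{record_hash}|{prev_hash}".encode("utf-8"))
        self.blocks.append({
            "index": index,
            "record_hash": record_hash,
            "prev_hash": prev_hash,
            "block_hash": block_hash,
        })
        return index

    def is_intact(self):
        """Recompute every link; any edited block breaks the chain."""
        prev_hash = GENESIS
        for i, block in enumerate(self.blocks):
            expected = sha256_hex(f"{i}|{block['record_hash']}|{prev_hash}".encode("utf-8"))
            if block["index"] != i or block["prev_hash"] != prev_hash or block["block_hash"] != expected:
                return False
            prev_hash = block["block_hash"]
        return True


def record_matches(ledger, store, index):
    """Check an off-chain record against the hash anchored on the ledger."""
    return sha256_hex(canonical(store[index])) == ledger.blocks[index]["record_hash"]


ledger = Ledger()
store = {}  # off-chain storage: ledger index -> full record

record = {"patient": "Patient/example", "medication": "metformin", "dose_mg": 500}
idx = ledger.append(sha256_hex(canonical(record)))
store[idx] = record

ok_before = record_matches(ledger, store, idx)
store[idx]["dose_mg"] = 1000  # simulate tampering with the off-chain copy
ok_after = record_matches(ledger, store, idx)
print(ok_before, ok_after, ledger.is_intact())
```

Keeping only hashes on the ledger avoids placing protected health information on a shared chain and keeps block sizes small, which is one reason hybrid designs are attractive where latency and privacy regulation are concerns.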

6.3. Federated Learning and Zero Trust Architectures

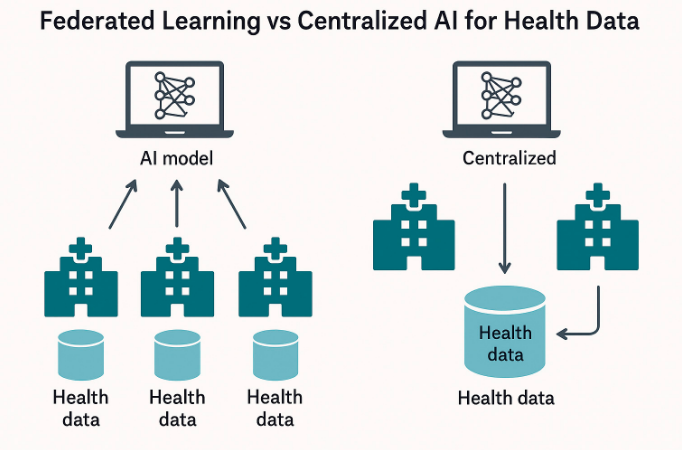

Federated learning (FL) enables AI models to be trained collaboratively without pooling data in a central location, a feature that facilitates interoperability in privacy-sensitive environments. In a multicenter clinical trial, an FL method achieved 88% accuracy in detecting diabetic retinopathy while maintaining compliance and institution-level data sovereignty [61].
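The core idea can be shown with a toy version of federated averaging: each site trains on its own data and shares only model parameters, which a coordinator combines in proportion to local sample counts. The sketch below fits a one-variable linear model on synthetic data and is not the method used in the cited trial; the site data, learning rate, and number of rounds are arbitrary choices made for illustration.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few gradient-descent steps on one site's private data."""
    w, b = weights
    for _ in range(epochs):
        grad_w = sum((w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum((w * x + b - y) for x, y in data) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates, sizes):
    """Combine site models, weighting each by its number of local samples."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)


def train(sites, rounds=100):
    """Coordinator loop: broadcast the model, collect updates, average them."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in sites]
        global_weights = federated_average(updates, [len(d) for d in sites])
    return global_weights


# Synthetic data following y = 2x + 1, split across three hypothetical sites.
# The raw (x, y) pairs never leave their site; only (w, b) is exchanged.
site_a = [(x, 2 * x + 1) for x in (0.0, 0.5, 1.0)]
site_b = [(x, 2 * x + 1) for x in (1.5, 2.0)]
site_c = [(x, 2 * x + 1) for x in (0.25, 0.75, 1.25, 1.75)]

w, b = train([site_a, site_b, site_c])
print(round(w, 2), round(b, 2))
```

The learned parameters approach the underlying slope and intercept even though no site sees another site's records. Real deployments typically add secure aggregation or differential privacy, because shared parameters can still leak information about local data.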

At the underlying infrastructure level, zero-trust architectures have reshaped healthcare cybersecurity by implementing continuous verification, highly segmented access, and rigorous endpoint monitoring. The U.S. accelerated adoption of this approach following Executive Order 14028, which emphasized the need to protect essential systems during national digital modernization efforts. Furthermore, emerging economies encounter interoperability challenges when integrating mobile health (mHealth) applications into their national electronic health record systems.

The national digital health strategy of Botswana highlights the promise and challenges of interoperability between decentralized mobile tools and centralized healthcare delivery structures [62][63].

Figure 3. Federated Learning vs Centralized AI for Health Data.


7. Implementation Challenges

Despite advances in technological standards and digital health innovation, numerous ongoing issues continue to hinder the scalable deployment of interoperable digital therapeutics and uHealth systems.

7.1. Technical Barriers

The lack of shared APIs and differing data formats among systems remains a fundamental barrier to interoperability. Ndlovu et al. in Botswana documented significant difficulty in integrating mHealth apps with national eRecord systems owing to different system architectures and inconsistent use of HL7 standards [63]. The continued use of proprietary data models further hinders the sharing of structured clinical content among institutions.

Mulukuntla highlighted that semantic interoperability issues stemming from various terminologies, such as ICD, SNOMED CT, and LOINC, lead to interpretation errors during data integration efforts, thereby weakening care continuity and clinical decision support tools [64].

Hryciw et al. pointed out that without the proper integration of AI tools into clinical workflows, usability failures and semantic mismatches may hinder appropriate decision-making and interoperability outcomes [56].

7.2. Policy and Regulatory Gaps

Policy misalignment across jurisdictions delays digital transformation. In LMICs, fragmented regulations and limited enforcement capacity often prevent the scaling of digital health pilots [65].

Ciecierski-Holmes et al. argue that poor governance, unclear accountability, and the absence of clear policy guidelines on AI contribute to inconsistencies and inequalities within LMICs [65].

Kaushik et al. noted that challenges within data-sharing protocols, most significantly those without clearly defined consent models and common data infrastructure, seriously limit the efficacy of AI solutions [66].

7.3. Constraints on Equity and Access

Digital health equity is compromised by urban–rural infrastructure gaps, broadband deficits, and limited digital literacy. As the World Health Organization has noted, rural and underserved healthcare facilities often lack reliable power, adequate internet access, and sufficient hardware, which hinders the large-scale use of interoperable health data systems [67].

Ahmed et al. highlighted that digital tools have the potential to narrow healthcare coverage gaps; however, they need to be designed appropriately and scaled to effectively reach populations that are currently underserved, especially in strengthening primary care settings [68].

7.4. Ethical Governance and Global Equity

The World Health Organization provides detailed guidance on the ethical governance of AI, emphasizing responsibility, transparency, and adherence to human rights [69]. Ho argues that equitable AI governance should consider the requirements for benefit-sharing, particularly in global health contexts where LMICs are likely to contribute to large-scale datasets without any leverage over innovation channels [69][70].

Aerts and Bogdan-Martin recommended an ecosystem of coordinated governance that involves global stakeholders approving country-level data stewardship, thereby upholding trust and sustainability within the digital health ecosystem [71].

7.5. Stakeholder Adoption Barriers

Resistance among clinicians, stemming from an increased documentation load, poor usability of new interfaces, and inadequate training, remains a significant inhibitor. According to Bernardi et al., a lack of end-user engagement during design and implementation tends to lead to weak system adoption and delays in incorporating the system into clinical workflows [71]. They also emphasized that a lack of intuitive design in EHRs is one reason clinicians are not satisfied, especially when interoperability complicates everyday tasks [72]. Gomis-Pastor et al. cautioned that a lack of clinical validation and blurred lines between developers and clinicians reduce the likelihood of successful technology implementation [73].

8. Policy Evaluation and Recommendations

8.1. TEFCA: Aspirations vs. Adoption

The Trusted Exchange Framework and Common Agreement (TEFCA) aims to standardize and coordinate national-level health information exchange within the United States. However, its voluntary nature limits enforcement and adoption across health information networks. Adler-Milstein et al. report that TEFCA implementation has been inconsistent owing to voluntary participation, complexity for smaller systems, and uncertainty about the motivations of stakeholders [74]. Furthermore, TEFCA's current infrastructure has limited native capability to support advanced use cases, such as AI-powered secondary analytics, raising doubts about its readiness to support new digital therapeutic ecosystems.

To increase adoption, Adler-Milstein et al. recommended enhancing TEFCA by providing more accurate metrics on interoperability performance and wider utilization across clinical, administrative, and research domains [74].

8.2. GDPR: Innovation vs. Data Minimization

Although the General Data Protection Regulation (GDPR) provides rigorous protection for personal data, its data minimization and purpose limitation principles sit in tension with AI-powered healthcare, which relies on large, multipatient, multisource datasets. This tension was identified by Hussein et al., who noted that the GDPR hinders the secondary use of data, a capability required for predictive algorithms and population health analyses [75]. The result is a regulatory bottleneck that prevents AI from reaching its full potential in FHIR-based interoperability and multi-stakeholder analytics unless consent-based models of governance are adopted.

8.3. WHO Digital Health Strategy

The WHO Global Strategy on Digital Health (2020–2025) advocates for inclusive and interoperable digital ecosystems. However, LMICs still face numerous barriers, including weak infrastructure, fragmented governance, and inadequate regulatory enforcement [67]. Kaushik et al. reported that, despite the WHO's top-level policy, information sharing within LMICs remains hindered by divergent legal frameworks and weak health IT ecosystems [75][76].

Faridoon and Kechadi believe that the introduction of healthcare governance frameworks, developed on the principles of security, modular system designs, and transparency, will align the compliance mandates of LMICs with AI tools [77].

To help bridge current gaps, a specially designed modular interoperability model was introduced to accelerate implementation and incrementally scale up the components.

8.4. Actionable Governance Models

Growing evidence supports consent-based data-sharing architectures that balance usability and regulatory compliance. For example, federated analytics architectures embedded with zero-trust protocols offer a pathway to reconcile AI's data needs with the requirements of the GDPR. These architectures de-identify patient data and train models locally, so that raw records stay at the originating site, while maintaining traceability and auditability. Swathi et al. maintained that architectural frameworks and governance structures will chart a future-proof healthcare data infrastructure [78].
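
To make the federated idea concrete, the following is a minimal sketch of one common approach, federated averaging, under simplifying assumptions: each site fits a one-feature linear model on its own de-identified records, uses only records flagged as consented, and returns just model weights and a record count to a coordinator, which also writes an audit entry. The `Record` class, the site names, and the learning-rate settings are hypothetical, and zero-trust controls such as per-request authentication are outside the scope of this sketch.

```python
from dataclasses import dataclass


@dataclass
class Record:
    x: float         # de-identified feature, e.g. a scaled sensor reading
    y: float         # outcome value
    consented: bool  # patient agreed to secondary analytic use


def local_update(weights, records, lr=0.05, epochs=20):
    """Gradient descent for y ~ w*x + b using one site's consented records only."""
    w, b = weights
    usable = [r for r in records if r.consented]
    if not usable:
        return (w, b), 0
    for _ in range(epochs):
        gw = sum((w * r.x + b - r.y) * r.x for r in usable) / len(usable)
        gb = sum((w * r.x + b - r.y) for r in usable) / len(usable)
        w, b = w - lr * gw, b - lr * gb
    return (w, b), len(usable)


def federated_round(weights, sites, audit_log):
    """One averaging round: sites share weights and counts, never raw records."""
    updates = []
    for name, records in sites.items():
        new_weights, n = local_update(weights, records)
        audit_log.append({"site": name, "records_used": n})  # traceability
        if n:
            updates.append((new_weights, n))
    total = sum(n for _, n in updates)
    if total == 0:
        return weights
    # Weight each site's model by the number of records it trained on.
    w = sum(u[0] * n for u, n in updates) / total
    b = sum(u[1] * n for u, n in updates) / total
    return (w, b)


# Synthetic data following y = 2x + 1; one record at clinic_b lacks consent.
sites = {
    "clinic_a": [Record(x, 2 * x + 1, True) for x in (0.0, 1.0, 2.0)],
    "clinic_b": [Record(x, 2 * x + 1, x != 3.0) for x in (1.0, 3.0, 4.0)],
}
audit = []
weights = (0.0, 0.0)
for _ in range(200):
    weights = federated_round(weights, sites, audit)
```

On this noise-free synthetic data the shared model approaches the underlying slope and intercept without any record leaving its site, and the audit list records how many consented records each site contributed in every round.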

Kim et al. recommend adopting proactive policy frameworks that envision disruptive innovation and chart precise implementation pathways for digital health ecosystems [79]. Thacharodi et al. likewise recommend incorporating newer technologies such as blockchain, AI, and telehealth at the system level to anticipate next-generation care [80].

9. Conclusion and Future Directions

This review synthesizes multidimensional aspects of digital health interoperability by examining global standards such as HL7 FHIR, governance frameworks including TEFCA and GDPR, and transformative enablers including AI, federated learning, and blockchain. Together, these elements form the infrastructure for digital therapeutics and ubiquitous health (uHealth). Wide implementation remains hindered by regulatory misalignment, technological fragmentation, and uneven digital infrastructure, particularly in low- and middle-income countries.

True digital transformation in uHealth must extend beyond the implementation of technology. As reiterated throughout this entry, the success of interoperability requires the integration of rights-based governance, context-aware regulation, and inclusive collaboration by all stakeholders. The adoption of policy templates or standards alone will not be effective unless it is combined with ethical design, semantic precision, and governable models.

Going forward, achieving balanced and resilient global digital health ecosystems will require:

- Modular, rights-aware architectures that flexibly adjust to diverse requirements within the health system and protect the rights of individuals.

- Rigorous clinical validation of AI-directed interventions to ensure generalizability to diverse demographic and institutional settings.

- Large-scale investment in LMICs, particularly in rural or underserved regions where interoperability is not yet functional.

- Federated and zero-trust analytics architectures that enable decentralized learning without loss of data sovereignty or security.

Success in the years ahead will depend not solely on technological innovation but also on trust in institutions, legal predictability, and international collaboration. For a world facing pandemics, health inequalities, and chronic disease burdens, building interoperable digital ecosystems is not optional; it is imperative for delivering sustainable, equitable, and people-focused care.

10. Limitations and Future Research

Although this review provides an extensive synthesis of interoperability frameworks within digital therapeutics and uHealth systems, certain limitations must be considered.

First, the review followed a narrative approach rather than a systematic review or meta-analysis. Although several databases were searched and subject matter experts were consulted, selection bias and the potential for overlooked grey literature remain.

Second, evidence on technologies such as blockchain, federated learning, and AI-based clinical decision support (CDS) tools comes from peer-reviewed case reports and preliminary adoption studies. These technologies currently exist only at the proof-of-concept or pilot stage, particularly in LMICs, which limits the generalizability of the evidence.

Third, interoperability challenges vary significantly by context, with infrastructure maturity, legal jurisdictions, and institutional readiness all exhibiting considerable variance. Therefore, the feasibility of the suggested governance models (such as TEFCA-like arrangements or WHO-led approaches) may need to be further localized and field-tested.

Subsequent research should endeavour to:

- Conduct comparative evaluations of interoperability implementations across diverse health systems, particularly in LMICs;

- Explore modular, low-resource architectures for HL7 FHIR adoption that are culturally and contextually appropriate;

- Experimentally validate the scalability and ethical appropriateness of AI software on federated and zero-trust architectures;

- Examine clinician adoption and usability patterns during system changes using mixed-method designs;

- Develop sound assessment frameworks that account for the real-world impact of interoperability reforms on health equity and outcomes.

These research directions are crucial in facilitating the development of secure, inclusive, and internationally sustainable digital health ecosystems.

References

- Gazzarata, R.; Almeida, J.; Lindsköld, L.; Cangioli, G. HL7 Fast Healthcare Interoperability Resources (HL7 FHIR) in Digital Healthcare Ecosystems for Chronic Disease Management: Scoping Review. Int. J. Med. Inform. 2024, 185, 105507. https://doi.org/10.1016/j.ijmedinf.2024.105507.

- Abernethy, A.P.; Adams, L.E.; Barrett, M.; Bechtel, C.; Brennan, P.; Butte, A.J.; et al. The Promise of Digital Health: Then, Now, and the Future. NAM Perspect. 2022, 6(22). https://doi.org/10.31478/202206e.

- Sharma, A.; Harrington, R.A.; McClellan, M.; Turakhia, M.P.; Eapen, Z.J.; Steinhubl, S.R.; et al. Using Digital Health Technology to Better Generate Evidence and Deliver Evidence-Based Care. J. Am. Coll. Cardiol. 2018, 71(23), 2680. https://doi.org/10.1016/j.jacc.2018.03.523.

- Alawiye, T.R. The Impact of Digital Technology on Healthcare Delivery and Patient Outcomes. E-Health Telecommun. Syst. Netw. 2024, 13(2), 13. https://doi.org/10.4236/etsn.2024.132002.

- Sinha, R.K. The Role and Impact of New Technologies on Healthcare Systems. Discov. Health Syst. 2024, 3(1). https://doi.org/10.1007/s44250-024-00163-w.

- Elysee, G.; Herrin, J.; Horwitz, L.I. An Observational Study of the Relationship between Meaningful Use-Based Electronic Health Information Exchange, Interoperability, and Medication Reconciliation Capabilities. Medicine (Baltimore) 2017, 96(41), e8274. https://doi.org/10.1097/MD.0000000000008274.

- Armoundas, A.A.; Ahmad, F.S.; Bennett, D.A. Data Interoperability for Ambulatory Monitoring of Cardiovascular Disease: A Scientific Statement from the American Heart Association. Circ. Genom. Precis. Med. 2024. https://doi.org/10.1161/HCG.0000000000000095.

- Salunkhe, V. Transforming Healthcare Research with Interoperability: The Role of FHIR and SMART on FHIR. In Handbook of Research on Healthcare Administration and Management; IGI Global: Hershey, PA, USA, 2024. https://doi.org/10.4018/979-8-3693-5523-7.ch007.

- Barker, W.; Chang, W.; Everson, J.; Gabriel, M. The Evolution of Health Information Technology for Enhanced Patient-Centric Care in the United States: Data-Driven Descriptive Study. J. Med. Internet Res. 2024. https://doi.org/10.2196/59791.

- Abbasi, A.B.; Layden, J.; Gordon, W.; Gregurick, S. A Unified Approach to Health Data Exchange: A Report from the US DHHS. JAMA 2025. https://doi.org/10.1001/jama.2025.0068.

- Zhang, X.; Saltman, R. Impact of Electronic Health Record Interoperability on Telehealth Service Outcomes. JMIR Med. Inform. 2022, 10(1), e31837. https://medinform.jmir.org/2022/1/e31837/.

- Nyangena, J.; Rajgopal, R.; Ombech, E.; Oloo, E.; Luchetu, H.; Wambugu, S.; et al. Maturity Assessment of Kenya's Health Information System Interoperability Readiness. BMJ Health Care Inform. 2021, 28(1). https://doi.org/10.1136/bmjhci-2020-100241.

- Aziz, S.U.A.; Askari, M.; Shah, S.N. Standards for Digital Health. In Handbook of eHealth Standards; Elsevier BV, 2020; p. 231. https://doi.org/10.1016/b978-0-12-817485-2.00017-1.

- Torab-Miandoab, A.; Samad‐Soltani, T.; Jodati, A.; Rezaei‐Hachesu, P. Interoperability of Heterogeneous Health Information Systems: A Systematic Literature Review. BMC Med. Inform. Decis. Mak. 2023, 23, 15. https://doi.org/10.1186/s12911-023-02115-5.

- Enticott, J.; Johnson, A.; Teede, H. Learning Health Systems Using Data to Drive Healthcare Improvement and Impact: A Systematic Review. BMC Health Serv. Res. 2021, 21(1), 200. https://doi.org/10.1186/s12913-021-06215-8.

- McMurry, A.J.; Gottlieb, D.I.; Miller, T.A.; Jones, J.R.; Atreja, A.; Crago, J.; et al. Cumulus: A Federated Electronic Health Record-Based Learning System Powered by Fast Healthcare Interoperability Resources and Artificial Intelligence. J. Am. Med. Inform. Assoc. 2024, 31(8), 1638–1647. https://doi.org/10.1093/jamia/ocae130.

- Szarfman, A.; Levine, J.G.; Tonning, J.M.; Weichold, F.; Bloom, J.C.; Soreth, J.M.; et al. Recommendations for Achieving Interoperable and Shareable Medical Data in the USA. Commun. Med. 2022, 2(1). https://doi.org/10.1038/s43856-022-00148-x.

- Mandel, J.C.; Kreda, D.A.; Mandl, K.D.; Kohane, I.S.; Ramoni, R.B. SMART on FHIR: A Standards-Based, Interoperable Apps Platform for Electronic Health Records. J. Am. Med. Inform. Assoc. 2016, 23(5), 899–908. https://doi.org/10.1093/jamia/ocv189.

- Apple Inc. Using HealthKit to Access Clinical Records with FHIR. Developer Documentation 2023. https://developer.apple.com/documentation/healthkit.

- Tabari, P.; Costagliola, G.; De Rosa, M. State-of-the-Art Fast Healthcare Interoperability Resources (FHIR)-Based Data Model and Structure Implementations: Systematic Scoping Review. JMIR Med. Inform. 2024, 12(1), e58445. https://doi.org/10.2196/58445.

- D’Amore, J.D.; Sittig, D.F.; Ness, R.B.; et al. App-Enabled EHRs: Benefits and Challenges of SMART on FHIR Apps. J. Med. Syst. 2018, 42(9), 165. https://doi.org/10.1007/s10916-018-1017-8.

- Bodenreider, O. The Unified Medical Language System (UMLS): Integrating Biomedical Terminology. Nucleic Acids Res. 2004, 32(Database issue), D267–D270. https://doi.org/10.1093/nar/gkh061.

- Schadow, G.; Mead, C.N.; McDonald, C.J. The HL7 Reference Information Model Under Scrutiny. Stud. Health Technol. Inform. 2001, 84(Pt 1), 133–137. https://pubmed.ncbi.nlm.nih.gov/17108519/.

- Giannangelo, K.; Millar, J. Mapping SNOMED CT to ICD-10. Stud. Health Technol. Inform. 2012, 180, 83–87. https://pubmed.ncbi.nlm.nih.gov/22874157/.

- Fung, K.W.; Xu, J.; Bodenreider, O. The New International Classification of Diseases 11th Edition: A Comparative Review with ICD-10 and ICD-10-CM. J. Am. Med. Inform. Assoc. 2020, 27(5), 738–746. https://doi.org/10.1093/jamia/ocz174.

- Martínez-Costa, C.; Menárguez-Tortosa, M.; Fernández-Breis, J.T. An Ontology-Based Approach for Representing Clinical Information in SNOMED CT and FHIR. J. Biomed. Semant. 2015, 6, 35. https://doi.org/10.1186/s13326-015-0026-2.

- U.S. Office of the National Coordinator for Health IT (ONC). Trusted Exchange Framework and Common Agreement (TEFCA) Overview. Available online: https://www.healthit.gov/tefca (accessed on 11 July 2025).

- Wager, K.A.; Lee, F.W.; Glaser, J.P. Health Care Information Systems: A Practical Approach for Health Care Management, 5th ed.; Jossey-Bass: Hoboken, NJ, USA, 2022; p. 83; ISBN 9781119853879.

- Holmgren, A.J.; Adler-Milstein, J.; Apathy, N.C. Electronic Health Record Documentation Burden Crowds Out Health Information Exchange Use by Primary Care Physicians. Health Aff. (Millwood) 2024, 43(11). https://doi.org/10.1377/hlthaff.2024.00398.

- Cohen, I.G.; Mello, M.M. HIPAA and Protecting Health Information in the 21st Century. JAMA 2018, 320(3), 231–232. https://doi.org/10.1001/jama.2018.5630.

- Szarfman, A.; Levine, J.G.; Tonning, J.M.; et al. Recommendations for Achieving Interoperable and Shareable Medical Data in the USA. Commun. Med. 2022, 2(1). https://doi.org/10.1038/s43856-022-00148-x.

- Rigas, E.S.; Lagakis, P.; Karadimas, M.; Logaras, E. Semantic Interoperability for an AI-Based Applications Platform for Smart Hospitals Using HL7 FHIR. Comput. Methods Programs Biomed. 2024, 240, 107138. https://www.sciencedirect.com/science/article/pii/S0164121224001389.

- Marfoglia, A.; Nardini, F.; Arcobelli, V.A.; Moscato, S. Towards Real-World Clinical Data Standardization: A Modular FHIR-Driven Transformation Pipeline to Enhance Semantic Interoperability in Healthcare. Comput. Methods Programs Biomed. 2025, 238, 107095. https://www.sciencedirect.com/science/article/pii/S0010482525000952.

- Zhang, X. Healthcare Regulation and Governance: Big Data Analytics and Healthcare Data Protection; Jefferson Digital Commons, 2020. Available online: https://jdc.jefferson.edu/cgi/viewcontent.cgi?article=1003&context=jscpssp (accessed on 11 July 2025).

- Konnoth, C. AI and Data Protection Law in Health. In Research Handbook on Health, AI and the Law; Solaiman, B., Cohen, I.G., Eds.; Edward Elgar: Cheltenham, UK, 2024; pp. 111–129.

- Gilbert, S.; Mathias, R.; Schönfelder, A.; Wekenborg, M.; Steinigen-Fuchs, J.; Dillenseger, A.; et al. A Roadmap for Safe, Regulation-Compliant Living Labs for AI and Digital Health Development. Sci. Adv. 2025, 11(20). https://doi.org/10.1126/sciadv.adv7719.

- Amar, F.; April, A.; Abran, A. Electronic Health Record and Semantic Issues Using Fast Healthcare Interoperability Resources: Systematic Mapping Review. J. Med. Internet Res. 2024, 26(1), e45209. https://www.jmir.org/2024/1/e45209/.

- World Health Organization. Global Strategy on Digital Health 2020–2025; WHO: Geneva, Switzerland, 2021. Available online: https://www.who.int/publications/i/item/9789240020924 (accessed on 11 July 2025).

- Ricciardi Celsi, L.; Zomaya, A.Y. Perspectives on Managing AI Ethics in the Digital Age. Information 2025, 16(4), 318. https://doi.org/10.3390/info16040318.

- Dhinakaran, D.; Edwin Raja, S.; Ramathilagam, A.; Vennila, G.; Alagulakshmi, A. Ethical and Legal Challenges with IoT in Home Digital Twins. MethodsX 2025, 14, 103409. https://doi.org/10.1016/j.mex.2025.103409.

- Watson, A.; Collins, L.A.; Danan, R.; Walsh, E.; Sarkhel, K.K. Use of EHR to Support Pilot Aeromedical Certification. U.S. Dept. of Transportation [Internet] 2024 [cited 2025 Jul]. Available online: https://doi.org/10.21949/1529642.

- Topol, E.J. High-Performance Medicine: The Convergence of Human and Artificial Intelligence. Nat. Med. 2019, 25(1), 44–56. https://doi.org/10.1038/s41591-018-0300-7.

- Gilbert, S.; Mathias, R.; Schönfelder, A.; Wekenborg, M.; Steinigen-Fuchs, J.; Dillenseger, A.; et al. A Roadmap for Safe, Regulation-Compliant Living Labs for AI and Digital Health Development. Sci. Adv. 2025, 11(20). https://doi.org/10.1126/sciadv.adv7719.

- Braunstein, M.L. Health Informatics on FHIR: How HL7's API is Transforming Healthcare; Springer: Cham, Switzerland, 2022. Available online: https://onesearch.nihlibrary.ors.nih.gov/permalink/01NIH_INST/15et3fj/alma991001345887304686 (accessed on 11 July 2025).

- Snyder, M.E.; Nguyen, K.A.; Patel, H.; Sanchez, S.L. Clinicians’ Use of Health Information Exchange Technologies for Medication Reconciliation in the U.S. Department of Veterans Affairs: A Qualitative Analysis. BMC Health Serv. Res. 2024, 24(1), 1–10. https://doi.org/10.1186/s12913-024-11690-w.

- Denney, K.B. Assessing Clinical Software User Needs for Improved Clinical Decision Support Tools [Internet]; Walden Dissertations and Doctoral Studies, 2015 [cited 2025 Jul]. Available online: https://scholarworks.waldenu.edu/dissertations/2562.

- Sarkar, I.N. Transforming Health Data to Actionable Information: Recent Progress and Future Opportunities in Health Information Exchange. Yearb. Med. Inform. 2022, 31(1), 203–214. https://doi.org/10.1055/s-0042-1742519.

- Laster, R.J. Improving the Department of Veterans Affairs Medical Centers Electronic Health Record Implementation [Internet]; Walden Dissertations and Doctoral Studies, 2024 [cited 2025 Jul]. Available online: https://scholarworks.waldenu.edu/dissertations/12228.

- Holmgren, A.J.; Esdar, M.; Hüsers, J.; Coutinho-Almeida, J. Health Information Exchange: Understanding the Policy Landscape and Future of Data Interoperability. Yearb. Med. Inform. 2023, 32(1), 184–194. https://doi.org/10.1055/s-0043-1768719.

- Wu, S.; Roberts, K.; Datta, S.; et al. Deep Learning in Clinical Natural Language Processing: A Methodical Review. J. Am. Med. Inform. Assoc. 2020, 27(3), 457–470. https://doi.org/10.1093/jamia/ocz200.

- Singhal, K.; Azizi, S.; Tu, T.; et al. Large Language Models Encode Clinical Knowledge. Nature 2023, 620(7974), 172–180. https://doi.org/10.1038/s41586-023-06291-2.

- Roberts, M.; Driggs, D.; Thorpe, M.; et al. Common Pitfalls and Recommendations for Using Machine Learning to Detect and Prognosticate for COVID-19 Using Chest Radiographs and CT Scans. Nat. Mach. Intell. 2021, 3(3), 199–217. https://doi.org/10.1038/s42256-021-00307-0.

- Goel, I.; Bhaskar, Y.; Kumar, N.; et al. Role of AI in Empowering and Redefining the Oncology Care Landscape: Perspective from a Developing Nation. Front. Digit. Health 2025, 7, 1550407. https://doi.org/10.3389/fdgth.2025.1550407.

- Varnosfaderani, S.M.; Forouzanfar, M. The Role of AI in Hospitals and Clinics: Transforming Healthcare in the 21st Century. Bioengineering 2024, 11(4), 337. https://doi.org/10.3390/bioengineering11040337.

- Pathak, Y.; Greenleaf, W.; Metman, L.V.; et al. Digital Health Integration with Neuromodulation Therapies: The Future of Patient-Centric Innovation in Neuromodulation. Front. Digit. Health 2021, 3, 618959. https://doi.org/10.3389/fdgth.2021.618959.

- Hryciw, B.N.; Fortin, Z.; Ghossein, J.; Kyeremanteng, K. Doctor-Patient Interactions in the Age of AI: Navigating Innovation and Expertise. Front. Med. 2023, 10, 1241508. https://doi.org/10.3389/fmed.2023.1241508.

- Sheller, M.J.; Reina, G.A.; Edwards, B.; et al. Federated Learning in Medicine: Facilitating Multi-Institutional Collaborations without Sharing Patient Data. Sci. Rep. 2020, 10, 12598. https://doi.org/10.1038/s41598-020-69250-1.

- Gupta, A.; Dogar, M.E.; Zhai, E.S.; et al. Innovative Telemedicine Approaches in Different Countries: Opportunity for Adoption, Leveraging, and Scaling-Up. Telehealth Med. Today 2019, 5. https://doi.org/10.30953/tmt.v5.160.

- Linn, L.A.; Koo, M.B. Blockchain for Health Data and Its Potential Use in Health IT and Health Care-Related Research. ONC White Paper [Internet] 2016 [cited 2025 Jul]. Available online: https://www.healthit.gov/sites/default/files/11-74-ablockchainforhealthcare.pdf.

- Vazirani, A.A.; O’Donoghue, O.; Brindley, D.; Meinert, E. Blockchain Vehicles for Efficient Medical Record Management. Blockchain Healthc. Today 2019, 2(1). https://doi.org/10.1038/s41746-019-0211-0.

- Williams, E.; Kienast, M.; Medawar, E.; Reinelt, J.; Merola, A.; Klopfenstein, S.; et al. A Standardized Clinical Data Harmonization Pipeline for Scalable AI Application Deployment (FHIR-DHP): Validation and Usability Study. JMIR Med. Inform. 2023, 11, e43847. https://medinform.jmir.org/2023/1/e43847.

- Vukotich, G. Healthcare and Cybersecurity: Taking a Zero Trust Approach. Health Serv. Insights 2023, 16, 11786329231187826. https://doi.org/10.1177/11786329231187826.

- Ndlovu, K.; Scott, R.E.; Mars, M. Interoperability Opportunities and Challenges in Linking mHealth Applications and eRecord Systems: Botswana as an Exemplar. BMC Med. Inform. Decis. Mak. 2021, 21, 1–9. https://doi.org/10.1186/s12911-021-01606-7.

- Mulukuntla, S. Interoperability in Electronic Medical Records: Challenges and Solutions for Seamless Healthcare Delivery. EIJMHS 2015, 1(1), 12–20. https://eijmhs.com/index.php/mhs/article/view/214.

- Ciecierski-Holmes, T.; Kahn, T.; Sharma, R.; Mejía, A.; Ravi, N. Artificial Intelligence in Low- and Middle-Income Countries: A Systematic Scoping Review. NPJ Digit. Med. 2022, 5, 104. https://www.nature.com/articles/s41746-022-00700-y.

- Kaushik, A.; Barcellona, C.; Mandyam, N.K.; Tan, S.Y. Challenges and Opportunities for Data Sharing Related to Artificial Intelligence Tools in Health Care in Low- and Middle-Income Countries: Systematic Review. J. Med. Internet Res. 2025, 27(1), e58338. https://www.jmir.org/2025/1/e58338/.

- World Health Organization. Assessing the Enabling Environment for Digital Health: A Guidance Tool for Countries with Limited Infrastructure; WHO: Geneva, Switzerland, 2023. Available online: https://cdn.who.int/media/docs/default-source/digital-health-documents/global-initiative-on-digital-health_executive-summary-31072023.pdf?sfvrsn=5282e32f_1 (accessed on 11 July 2025).

- Ahmed, S.; Bhattacharyya, S.; Jafri, A.; Essar, M.Y.; Beiersmann, C.; Mathur, A.; et al. Strengthening Primary Health Care through Digital Platforms: Closing the Universal Health Coverage Gap. Healthcare 2024, 13(9), 1060. https://www.mdpi.com/2227-9032/13/9/1060.

- World Health Organization. Ethics and Governance of Artificial Intelligence for Health: WHO Guidance; WHO: Geneva, Switzerland, 2021. Available online: https://iris.who.int/bitstream/handle/10665/341996/9789240029200-eng.pdf (accessed on 11 July 2025).

- Ho, C.W.L. Equitable Artificial Intelligence in Global Health: Benefit Sharing and Governance. Front. Public Health 2022, 10, 768977. https://www.frontiersin.org/articles/10.3389/fpubh.2022.768977/full.

- Aerts, A.; Bogdan-Martin, D. Leveraging Data and AI to Deliver on the Promise of Digital Health. Int. J. Med. Inform. 2021, 150, 104456. https://doi.org/10.1016/j.ijmedinf.2021.104456.

- Bernardi, L.; Ferrer, M.; Arias, J.; Castagna, D.; Ismailova, K. Human-Centered Design in Digital Health: Best Practices for Clinician Engagement in Digital Transformation. Digit. Health 2023, 9, 1–14. https://doi.org/10.1177/20552076231174338.

- Gomis-Pastor, M.; Berdún, J.; Borrás-Santos, A. Clinical Validation of Digital Healthcare Solutions: State of the Art, Challenges and Opportunities. Healthcare 2024, 12(11), 1057. https://www.mdpi.com/2227-9032/12/11/1057.

- Adler-Milstein, J.; Worzala, C.; Dixon, B.E. Future Directions for Health Information Exchange. In Advances in Health Informatics; Elsevier: Amsterdam, The Netherlands, 2023. https://doi.org/10.1016/B978-0-323-90802-3.00005-8.

- Hussein, R.; Griffin, A.C.; Pichon, A. A Guiding Framework for Creating a Comprehensive Strategy for mHealth Data Sharing, Privacy, and Governance in Low-and Middle-Income Countries (LMICs). J. Am. Med. Inform. Assoc. 2023, 30(4), 787–794. https://doi.org/10.1093/jamia/ocad016.

- Kaushik, A.; Barcellona, C.; Mandyam, N.K.; Tan, S.Y. Challenges and Opportunities for Data Sharing Related to Artificial Intelligence Tools in Health Care in Low-and Middle-Income Countries: Systematic Review. J. Med. Internet Res. 2025, 27(1), e58338. https://www.jmir.org/2025/1/e58338.

- Faridoon, A.; Kechadi, M.T. Healthcare Data Governance, Privacy, and Security—A Conceptual Framework. In AI for Smart Healthcare Systems; Springer: Cham, Switzerland, 2024. https://doi.org/10.1007/978-3-031-72524-1_19.

- Swathi, N.L.; Kavitha, S.; Karpakavalli, M. Healthcare Information Systems Management; IGI Global: Hershey, PA, USA, 2025. Available online: https://www.igi-global.com/viewtitle.aspx?titleid=357954 (accessed on 11 July 2025).

- Kim, H.; Kwon, I.H.; Chul, W. Future and Development Direction of Digital Healthcare. Healthc. Inform. Res. 2021, 27(2), 95. https://doi.org/10.4258/hir.2021.27.2.95.

- Thacharodi, A.; Singh, P.; Meenatchi, R.; Ahmed, Z.; Kumar, R.R.S.; Neha, V.; et al. Revolutionizing Healthcare and Medicine: The Impact of Modern Technologies for a Healthier Future—A Comprehensive Review. Health Care Sci. 2024, 3(5), 329. https://doi.org/10.1002/hcs2.115.